Automated High Availability in kubeadm v1.15: Batteries Included But Swappable

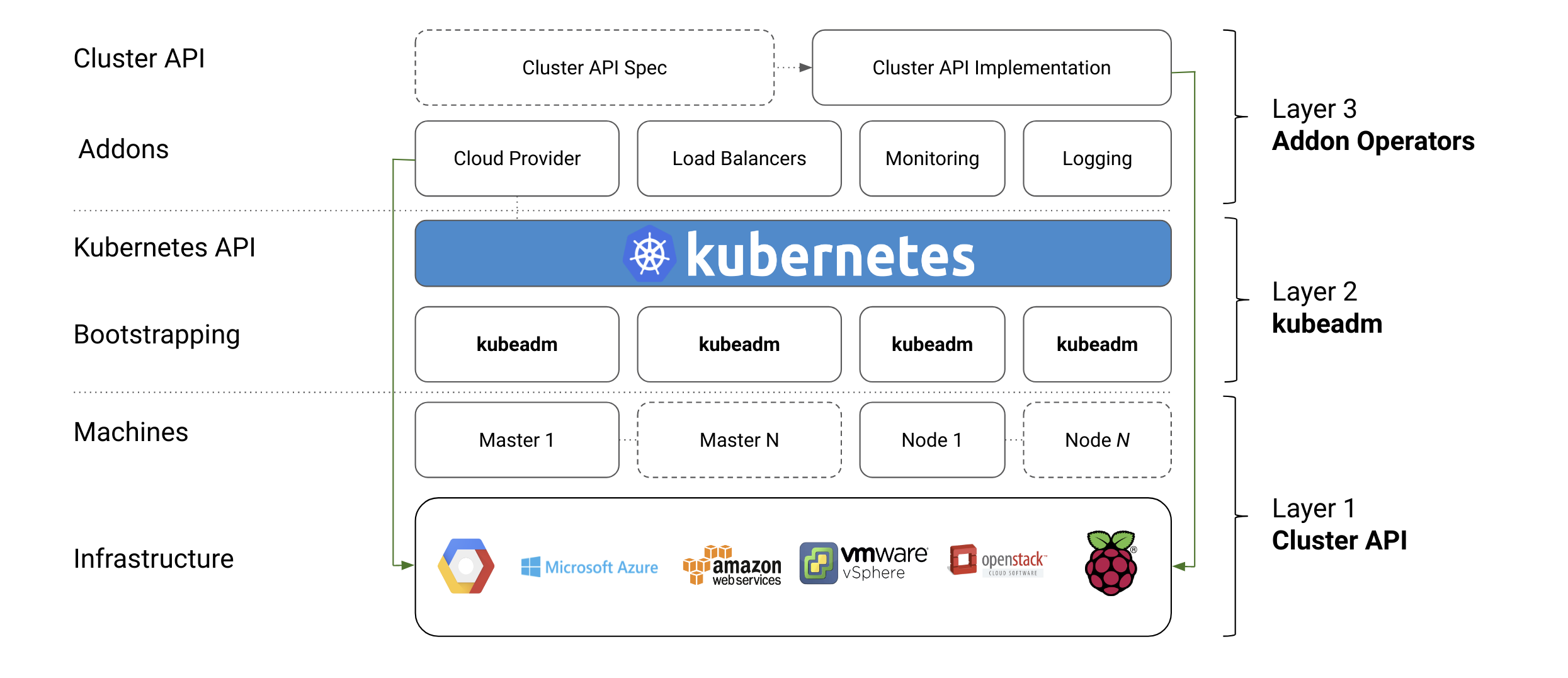

The core of the kubeadm interface is quite simple: you create new control plane nodes by running kubeadm init, and you join worker nodes to the control plane by running kubeadm join. kubeadm also includes common utilities for managing already bootstrapped clusters, such as control plane upgrades and token and certificate renewal. To keep kubeadm lean, focused, and vendor/infrastructure agnostic, some tasks are deliberately out of scope. Those tasks are addressed by other SIG Cluster Lifecycle projects; for example, the Cluster API handles infrastructure provisioning and management.

Instead, kubeadm covers only the common denominator in every Kubernetes cluster: the control plane.

What’s new in kubeadm v1.15?

We are delighted to announce that automated support for High Availability clusters is graduating to Beta in kubeadm v1.15.

Let’s give a great shout out to all the contributors who helped in this effort and to the early adopters for the great feedback received so far!

But how does automated High Availability work in kubeadm?

The great news is that you can use the familiar kubeadm init and kubeadm join workflow to create a high availability cluster as well. The only difference is that you pass the --control-plane flag to kubeadm join when adding more control plane nodes.
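The init/join workflow described above can be sketched as a small helper that assembles the commands for each kind of node. This is a minimal illustration, not part of kubeadm: the function names, the load balancer address, the token, and the CA certificate hash are hypothetical placeholders, and distributing the control plane certificates to the additional nodes is left out of the sketch. The only thing that differs between joining a worker and joining another control plane node is the --control-plane flag.

```python
from typing import List


def init_command(config_path: str) -> List[str]:
    """Build the command for the first control plane node (hypothetical helper)."""
    return ["kubeadm", "init", f"--config={config_path}"]


def join_command(endpoint: str, token: str, ca_cert_hash: str,
                 control_plane: bool) -> List[str]:
    """Build a kubeadm join command (hypothetical helper).

    Workers and extra control plane nodes use the same command;
    control_plane=True only appends the --control-plane flag.
    """
    cmd = [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
    ]
    if control_plane:
        cmd.append("--control-plane")
    return cmd


if __name__ == "__main__":
    # Placeholder values for illustration only.
    endpoint = "lb.example.com:6443"
    token = "abcdef.0123456789abcdef"
    ca_hash = "sha256:<hash>"

    print(" ".join(init_command("kubeadm-config.yaml")))
    print(" ".join(join_command(endpoint, token, ca_hash, control_plane=True)))
    print(" ".join(join_command(endpoint, token, ca_hash, control_plane=False)))
```

Building the arguments as a list rather than a single string keeps them ready to pass to subprocess.run without shell quoting issues.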

A 3-minute screencast of this feature is available. The setup starts as follows:

1. Set up a Load Balancer.
2. In the config file, set the controlPlaneEndpoint field to the address where your Load Balancer can be reached.
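The config file step can be sketched as a small generator for a kubeadm ClusterConfiguration whose controlPlaneEndpoint points at the Load Balancer. This is a minimal sketch under stated assumptions: it targets the kubeadm.k8s.io/v1beta2 config API that ships with kubeadm v1.15, the cluster_config helper and the lb.example.com:6443 address are hypothetical, and the YAML is written by hand to avoid a third-party dependency.

```python
def cluster_config(endpoint: str, kubernetes_version: str = "v1.15.0") -> str:
    """Return a minimal ClusterConfiguration as YAML text (hypothetical helper).

    endpoint is the address of the Load Balancer that fronts all control
    plane nodes, e.g. "lb.example.com:6443" (placeholder).
    """
    if not endpoint or any(ch.isspace() for ch in endpoint):
        raise ValueError(f"invalid control plane endpoint: {endpoint!r}")
    # Assumption: v1beta2 is the config API version used with kubeadm v1.15.
    return (
        "apiVersion: kubeadm.k8s.io/v1beta2\n"
        "kind: ClusterConfiguration\n"
        f"kubernetesVersion: {kubernetes_version}\n"
        f'controlPlaneEndpoint: "{endpoint}"\n'
    )


if __name__ == "__main__":
    # Write the file that would later be passed to kubeadm init --config.
    with open("kubeadm-config.yaml", "w") as f:
        f.write(cluster_config("lb.example.com:6443"))
```

Pointing controlPlaneEndpoint at the Load Balancer rather than at a single node is what lets every joining node, and every client, reach whichever control plane instance is currently healthy.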

Source: kubernetes.io